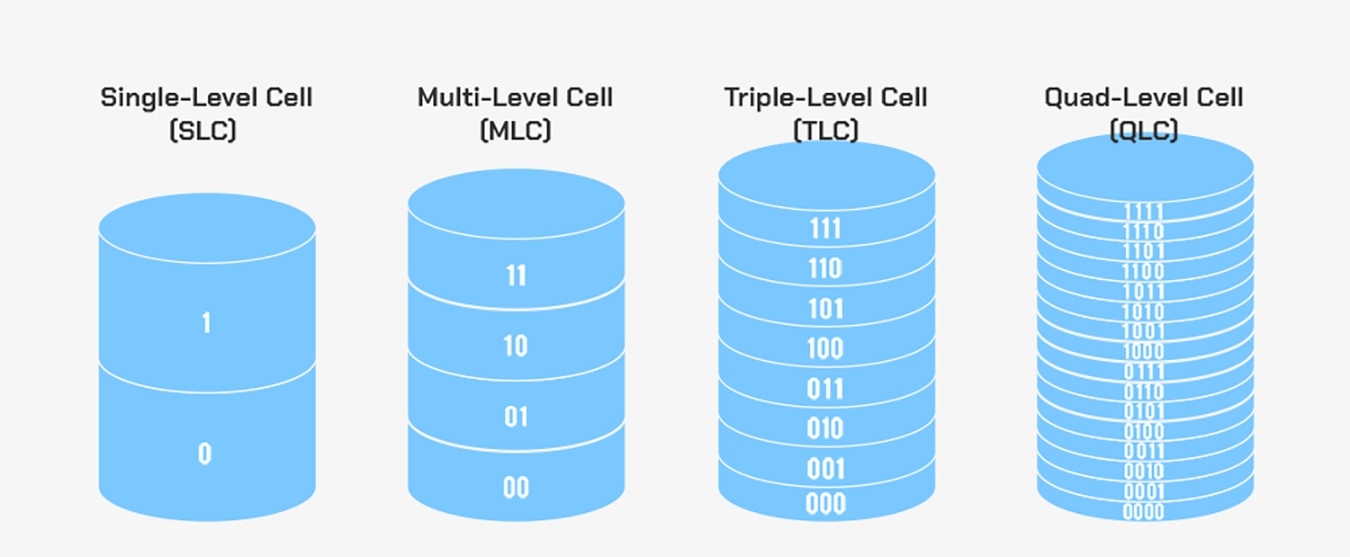

We are in an era of exploding data volume. AI training, cloud service operations, high-definition video archiving, and the demand to replace traditional mechanical hard drives are all driving an almost limitless desire for storage capacity. However, the miniaturization of semiconductor manufacturing processes is gradually approaching physical limits, making it crucial to reduce cost per GB by increasing storage density. On the path of technological evolution, from SLC storing 1 bit per cell, to MLC with 2 bits, TLC with 3 bits, and QLC with 4 bits, each step represents a new balance between cost and reliability. Today, PLC (Penta-Level Cell) technology, storing 5 bits of data per cell, is seen as a key development direction for next-generation high-density storage.

NAND Flash Basics: How Charge Stores Data

NAND Flash is a type of non-volatile memory, meaning it retains data for a long time even after power is removed. Its core functionality relies on precise control of the amount of charge within each memory cell. The most basic memory cell can be understood as a special transistor with an additional charge-storage layer, either a “floating gate” or a functionally similar “charge trap layer,” surrounded by insulating material. Because of this insulation, injected electrons are effectively isolated and stay in place for a long time, enabling persistent data storage.

Data Recording Principle. Data is recorded as the cell’s “threshold voltage,” the minimum voltage required to turn on the transistor. Injecting different amounts of electrons into the floating gate alters the electrical characteristics of the transistor and thereby changes its threshold voltage. The controller applies a reference voltage and detects whether the transistor turns on, thus determining the current threshold voltage state of the cell. Each specific voltage state corresponds to a unique data encoding. The essence of “multi-level cell” technology is to precisely define and distinguish more than two threshold voltage states within a single cell, thereby storing more than one bit of data.
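The sensing principle above can be sketched in a few lines of Python. This is a minimal illustration rather than a controller implementation: the 0 to 6 V window, the evenly spaced reference voltages, and the names `make_read_refs`, `cell_conducts`, and `read_cell` are all assumptions made for the example.

```python
def make_read_refs(bits_per_cell, v_min=0.0, v_max=6.0):
    """Return the 2**bits - 1 reference voltages that separate the states.

    The window (v_min..v_max) and the even spacing are illustrative assumptions.
    """
    states = 2 ** bits_per_cell
    step = (v_max - v_min) / states
    return [v_min + step * i for i in range(1, states)]


def cell_conducts(threshold_voltage, v_ref):
    """A cell turns on once the applied voltage reaches its threshold voltage."""
    return v_ref >= threshold_voltage


def read_cell(threshold_voltage, refs):
    """Step through the reference voltages, lowest first, and return the
    index of the first one at which the cell conducts (the state index)."""
    for state, v_ref in enumerate(refs):
        if cell_conducts(threshold_voltage, v_ref):
            return state
    return len(refs)  # never conducted: the cell is in the highest state


mlc_refs = make_read_refs(2)       # [1.5, 3.0, 4.5] for a 2-bit cell
print(read_cell(3.2, mlc_refs))    # → 2
print(len(make_read_refs(5)))      # → 31 reference levels for a 5-bit cell
```

A cell with n bits needs 2^n - 1 reference levels to separate its 2^n states, which is why each added bit makes sensing noticeably harder.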

Write, Read, and Erase. Based on the above principles, operations on NAND Flash are mainly divided into three types: write, read, and erase. The write operation, also called programming, is achieved by injecting charge into the floating gate of the cell. The erase operation removes the charge from the floating gate, restoring the cell to its initial state. It is particularly important to note that the smallest erase unit for NAND Flash is the “block,” while programming and reading can be performed on the smaller “page” unit. During a read operation, the controller applies a series of precise reference voltages to the cell. By sensing whether the transistor turns on at different voltages, it determines the threshold voltage corresponding to the currently stored charge, ultimately decoding the stored data.
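The difference between page-level programming and block-level erasing can be made concrete with a toy model. The sketch below is a simplification under stated assumptions: the `Block` class, its four pages, and the rule that a programmed page cannot be programmed again until the block is erased are modeled here for illustration and do not describe any specific device.

```python
ERASED = None  # marker for a page that holds no data


class Block:
    """Toy model of one NAND block: pages are programmed and read
    individually, but only the whole block can be erased."""

    def __init__(self, pages_per_block=4):  # page count is an arbitrary assumption
        self.pages = [ERASED] * pages_per_block
        self.erase_count = 0

    def program(self, page_index, data):
        # Assumption for this model: no in-place overwrite of a programmed page.
        if self.pages[page_index] is not ERASED:
            raise ValueError("page already programmed; erase the whole block first")
        self.pages[page_index] = data

    def read(self, page_index):
        return self.pages[page_index]

    def erase(self):
        # Erase always clears every page in the block at once.
        self.pages = [ERASED] * len(self.pages)
        self.erase_count += 1


block = Block()
block.program(0, b"hello")
print(block.read(0))        # → b'hello'
block.erase()
print(block.read(0))        # → None
print(block.erase_count)    # → 1
```

Updating a single page therefore means writing the new data elsewhere and erasing the old block later, which is one reason erase counts accumulate and cell lifespan matters.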

The Rise of 3D NAND. As manufacturing processes continued to shrink, planar NAND Flash ran into physical bottlenecks and reliability problems that made further size reduction difficult. To keep increasing capacity, the industry turned to three-dimensional stacking technology, known as 3D NAND. 3D NAND stacks memory cells vertically, like building a skyscraper, multiplying storage density on the same planar area. However, beyond three-dimensional stacking, increasing the number of bits stored per memory cell remains another fundamental technological path to further enhance storage density and reduce costs.

Evolution of Storage Technology: From SLC to QLC

The core goal of the evolution of storage technology is to continuously increase storage density to reduce cost per GB. This goal is primarily achieved by increasing the number of bits stored per memory cell. The following outlines the technological development path from SLC to QLC.

SLC: The Benchmark for Performance and Reliability

SLC stands for Single-Level Cell, storing 1 bit of data per cell. This 1 bit of data corresponds to two charge states, typically represented as 0 and 1. Since only two states need to be distinguished, SLC offers extremely high read/write speeds, the longest lifespan, and the strongest data reliability. Its disadvantage is the lowest storage density, resulting in the highest cost per GB. Therefore, SLC is mainly used in enterprise servers and industrial fields where performance and reliability are paramount.

MLC: The Balance Point of Performance and Cost

MLC stands for Multi-Level Cell, storing 2 bits of data per cell, corresponding to four charge states. By storing more data in each cell, MLC achieves double the storage capacity of SLC on the same chip area, significantly reducing cost. Although its performance, lifespan, and reliability are not as high as SLC, they reach a good balance. MLC was long the mainstream choice for high-end consumer solid-state drives and enterprise storage.

TLC: The Mainstream Choice for the Consumer Market

TLC stands for Triple-Level Cell, storing 3 bits of data per cell, corresponding to eight charge states. TLC further expands the advantages of storage density and cost reduction, becoming the absolute mainstream in the current consumer SSD market. With advanced controller algorithms and error correction technologies, its endurance already meets the needs of the vast majority of daily applications.

QLC: The Practice of High-Density Storage

QLC stands for Quad-Level Cell, storing 4 bits of data per cell, corresponding to sixteen charge states. The advantage of QLC lies in its high storage density and lower cost, making it very suitable for building large-capacity solid-state drives. However, its disadvantages are also more obvious, including slower write speeds and a further shortened lifespan compared to TLC. Currently, QLC is mainly used in scenarios with low write performance requirements, such as large-capacity external storage and cold data storage in data centers.

Core Challenges Behind the Evolution

The evolution from SLC to QLC is not a simple linear addition. As the number of bits per cell increases, the number of voltage states that need to be precisely distinguished grows exponentially, from 2 to 16. This means the voltage window available to each state becomes extremely narrow, which places stringent requirements on charge control precision, noise immunity, and error correction technology. The development of PLC technology is the next step directly facing this core challenge.
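A short calculation shows how quickly the per-state window shrinks. The sketch below assumes a fixed total window of 6 V divided evenly among the states; both the 6 V figure and the even division are illustrative assumptions, not device specifications.

```python
def state_spacing(bits_per_cell, window_volts=6.0):
    """Return (number of states, volts available per state) for a cell,
    assuming the window is split evenly among 2**bits states."""
    states = 2 ** bits_per_cell
    return states, window_volts / states


for name, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4), ("PLC", 5)]:
    states, spacing = state_spacing(bits)
    print(f"{name}: {states:2d} states, {spacing * 1000:.1f} mV per state")
```

Each added bit doubles the number of states and halves the room available to each one, so the margin falls exponentially while the capacity gain per step gets smaller.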

The PLC Technology

PLC, or Penta-Level Cell, is the next stage in the evolution of NAND Flash technology. Its core characteristic is pushing storage density to new heights, but it also faces unprecedented engineering challenges.

Technical Definition of PLC

PLC stands for Penta-Level Cell, meaning each memory cell stores 5 bits of data. These 5 bits correspond to 32 different threshold voltage states. Compared to QLC’s 16 states, PLC needs to precisely define twice the number of voltage levels within the same physical voltage window. Currently, this technology is still in the development and verification stage, with manufacturers such as Solidigm among the first to demonstrate prototype products, indicating the future direction of high-density storage.

Primary Challenge: Narrow Voltage Window

The fundamental challenge facing PLC technology stems from the physical level. To distinguish 32 voltage states, the voltage difference between adjacent states must be compressed to a very small value. This makes the window for each state extremely narrow and sharply reduces the error tolerance. Any tiny charge fluctuation, transistor characteristic variation, or electronic noise during reading could cause the controller to misjudge the voltage state, leading to data errors. The feasibility of PLC therefore depends directly on whether this signal-to-noise ratio challenge can be overcome.
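The effect of a narrower window on misreads can be estimated with a simple noise model. The sketch below is only a rough illustration under strong assumptions: states are evenly spaced in a 6 V window, each cell sits at the center of its state, all states are equally likely, and the read noise is Gaussian with standard deviation `sigma_volts`. None of these values come from a real device.

```python
import math


def misread_probability(bits_per_cell, sigma_volts, window_volts=6.0):
    """Average probability that Gaussian noise pushes a cell's sensed
    voltage across a state boundary (illustrative model only)."""
    states = 2 ** bits_per_cell
    half_spacing = window_volts / states / 2
    # Probability that |noise| exceeds the distance to the nearest boundary.
    two_sided_tail = math.erfc(half_spacing / (sigma_volts * math.sqrt(2)))
    # Inner states can fail on both sides, the two edge states on one side
    # only; averaging over all states gives the factor (states - 1) / states.
    return (states - 1) / states * two_sided_tail


sigma = 0.05  # assumed 50 mV of combined noise and variation
for name, bits in [("TLC", 3), ("QLC", 4), ("PLC", 5)]:
    print(f"{name}: {misread_probability(bits, sigma):.3e}")
```

Under this model, halving the spacing does far more than double the raw error rate, because the Gaussian tail grows very steeply as the boundary moves closer.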

Engineering Breakthrough

Facing extremely high native bit error rates, the practicality of PLC depends heavily on significant advances in storage controllers and error correction technology. Traditional error correction codes can no longer meet the demands; more powerful Low-Density Parity-Check (LDPC) codes combined with soft-decision decoding must be adopted. Soft-decision decoding does not simply judge the signal as 0 or 1, but infers the most likely data value through probability calculations, significantly improving error correction capability. Additionally, the controller needs intelligent read-retry mechanisms: when the initial read fails, it dynamically adjusts the reference voltage and reads again, possibly several times, to find the correct signal point. These complex algorithms place very high demands on the controller’s computational power.
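Both ideas can be sketched briefly. The example below is a minimal illustration, not a real controller or LDPC decoder: `llr` computes the kind of soft value a decoder could consume, assuming two neighboring states with Gaussian voltage distributions, and `read_with_retry` shows the retry loop. The callbacks `read_page` and `ecc_decode`, the offset list, and the fake helpers are all hypothetical names invented for this sketch.

```python
def llr(v_read, mu0, mu1, sigma):
    """Log-likelihood ratio ln(P(v | bit=0) / P(v | bit=1)) for two Gaussian
    states centered at mu0 and mu1. Positive means bit 0 is more likely;
    the magnitude expresses confidence."""
    return ((v_read - mu1) ** 2 - (v_read - mu0) ** 2) / (2 * sigma ** 2)


def read_with_retry(read_page, ecc_decode, offsets_mv=(0, -20, 20, -40, 40)):
    """Re-read with shifted reference voltages until decoding succeeds.

    read_page(offset_mv) -> raw bytes; ecc_decode(raw) -> (ok, data).
    Both callbacks and the offset table are assumptions of this sketch.
    """
    for attempt, offset_mv in enumerate(offsets_mv):
        ok, data = ecc_decode(read_page(offset_mv))
        if ok:
            return data, attempt
    raise IOError("uncorrectable page after all read-retry attempts")


# Fake hardware for demonstration: pretend the stored charge has drifted
# so that only a -40 mV reference shift yields decodable data.
def fake_read_page(offset_mv):
    return b"payload" if offset_mv == -40 else b"garbage"


def fake_ecc_decode(raw):
    return raw == b"payload", raw


print(llr(1.4, mu0=1.0, mu1=2.0, sigma=0.2))             # weakly favors bit 0
print(read_with_retry(fake_read_page, fake_ecc_decode))  # → (b'payload', 3)
```

A hard decision keeps only the sign of the LLR, while a soft-decision decoder also uses its magnitude, so a reading near the boundary counts for less than one deep inside a state.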

Impact on Performance and Lifespan

The characteristics of PLC technology also directly affect its performance and reliability. Because finer control of charge injection is needed to hit one of 32 voltage states, the write process requires more program-verify cycles, leading to significantly slower write speeds than QLC and TLC. In terms of lifespan, more frequent and precise charge operations accelerate the aging of memory cells, so the native endurance of PLC flash is expected to be lower than that of QLC. To offset this weakness in practical applications, additional measures are needed at the system level, such as configuring higher over-provisioning, adopting more aggressive wear-leveling algorithms, and relying on SLC caching to absorb burst write loads.
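The link between narrower states and slower writes can be seen in a simplified program-verify loop. This sketch assumes each program pulse raises the threshold voltage by a fixed step and that a verify read follows every pulse; the function name `program_cell` and the millivolt values are illustrative assumptions.

```python
def program_cell(target_mv, pulse_step_mv, start_mv=0, max_pulses=10_000):
    """Apply fixed-size program pulses until the verify step sees the
    threshold voltage reach the target. Returns (final_mv, pulses_used)."""
    vth_mv = start_mv
    pulses = 0
    while vth_mv < target_mv:          # verify: has the cell reached the target?
        if pulses >= max_pulses:
            raise RuntimeError("cell failed to program")
        vth_mv += pulse_step_mv        # program: inject a little more charge
        pulses += 1
    return vth_mv, pulses


# The overshoot is always smaller than one step, so a narrower state
# forces a smaller step and therefore more program-verify cycles.
print(program_cell(3000, 300))   # → (3000, 10)
print(program_cell(3000, 150))   # → (3000, 20)
```

Halving the step size to fit a state that is half as wide doubles the number of cycles in this model, which is the basic reason write speed drops as bits per cell increase.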

Differences Among the Five Memory Cell Technologies

The table below clearly shows the differences in key metrics among the five NAND Flash memory cell technologies from SLC to PLC.

| Technology Type | Bits per Cell | Number of States | Relative Cost | Relative Endurance | Main Advantage | Typical Application Scenarios |
|---|---|---|---|---|---|---|
| SLC | 1 bit | 2 | Highest | Highest | Ultra-high speed, very long lifespan, high reliability | Enterprise mission-critical, high-speed cache |
| MLC | 2 bits | 4 | High | High | Excellent balance of performance and cost | Enterprise SSD, high-end consumer SSD |
| TLC | 3 bits | 8 | Medium | Medium | Mainstream choice for cost and capacity | Mainstream consumer SSD, mobile devices |
| QLC | 4 bits | 16 | Low | Relatively Low | High storage density, low cost | Large-capacity consumer SSD, data center cold storage |
| PLC | 5 bits | 32 | Expected to be Low | Expected to be Low | Ultimate storage density, lowest cost | Ultra-large scale cold data archiving |

From the table, a clear trend can be seen: as the number of bits stored per cell increases, storage density and cost-effectiveness continuously improve, but this comes at the expense of read/write speed and cell lifespan. Therefore, different technologies are suited for distinctly different scenarios. SLC serves areas with the most stringent demands for performance and reliability, while PLC’s goal is to provide a highly cost-effective storage solution for massive cold data within acceptable performance and lifespan limits.

Value, Positioning, and Future of PLC Technology

Ultimate Cost and Density Advantage. The fundamental driving force behind PLC technology development is the pursuit of ultimate storage density and cost-effectiveness. By accommodating 5 bits of data per memory cell, PLC can provide higher storage capacity than QLC on the same die area, which translates directly into lower cost per GB. Its primary target is the market currently dominated by high-capacity mechanical hard drives: it aims to offer hyperscale data centers a cold data storage solution with advantages in power consumption per unit volume, access speed, and physical footprint.
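The size of this advantage follows from simple arithmetic. The sketch below assumes the same number of cells and the same manufacturing cost per die for both technologies, which ignores yield, extra error-correction overhead, and over-provisioning; it is an upper bound for illustration, not a cost forecast.

```python
from fractions import Fraction


def capacity_gain(old_bits, new_bits):
    """Capacity ratio for the same number of cells."""
    return Fraction(new_bits, old_bits)


def relative_cost_per_gb(old_bits, new_bits):
    """Cost per GB relative to the old technology, assuming equal die cost."""
    return Fraction(old_bits, new_bits)


gain = capacity_gain(4, 5)               # QLC -> PLC
cost = relative_cost_per_gb(4, 5)
print(f"capacity: +{float(gain - 1):.0%}")       # → capacity: +25%
print(f"cost per GB: {float(cost):.0%} of QLC")  # → cost per GB: 80% of QLC
```

For comparison, the same calculation gives +100% for SLC to MLC and about +33% for TLC to QLC, so each additional bit brings a smaller relative gain.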

Severe Challenges Faced. The mass production and application of PLC technology face multiple severe challenges. First, distinguishing 32 voltage states requires unprecedented control precision, leading to low initial production yields and extremely complex quality control. Second, to achieve reliable data storage, the controller needs powerful real-time computing capabilities to run complex error correction algorithms, increasing the design difficulty and power consumption of the main controller chip. Finally, the native endurance of PLC flash is low and must be compensated for by system-level techniques, such as setting larger over-provisioning areas, adopting more efficient data wear leveling and garbage collection mechanisms. These all increase the design complexity of the overall solution.
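Two of the system-level techniques mentioned above are easy to illustrate. In the sketch below, `over_provisioning_ratio` uses the common definition of spare capacity relative to user capacity, and `pick_block_for_write` shows the simplest form of wear leveling, choosing the free block with the fewest erases. The function names, capacities, and erase counts are made up for the example.

```python
def over_provisioning_ratio(physical_gb, user_gb):
    """Spare capacity as a fraction of the user-visible capacity."""
    return (physical_gb - user_gb) / user_gb


def pick_block_for_write(free_blocks):
    """Simplest wear-leveling policy: write to the least-worn free block.

    free_blocks maps a block id to its erase count (hypothetical structure).
    """
    return min(free_blocks, key=free_blocks.get)


print(f"{over_provisioning_ratio(1280, 1000):.0%}")           # → 28%
print(pick_block_for_write({"blk0": 5, "blk1": 2, "blk2": 9}))  # → blk1
```

More spare capacity gives garbage collection more room to work and spreads erases over more blocks, which is how these techniques compensate for low native endurance.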

Application Scenario Positioning. Based on its technical characteristics, the application scenario positioning of PLC is very clear and specific. It is highly suitable for ultra-cold data archiving scenarios where write operations are extremely rare and read access frequency is very low. Examples include deep archive storage tiers in cloud services, long-term data backups for regulatory compliance, historical log files, and the preservation of digital assets like medical images. Conversely, PLC is completely unsuitable for write-intensive tasks such as operating systems, databases, and frequently updated application data. Its role is to be the lowest-cost, highest-capacity foundational tier in the data storage ecosystem.

Future Outlook. The commercial adoption of PLC technology relies not only on the maturity of the flash memory chips themselves but also on the coordinated development of controller chips, firmware algorithms, and the wider data storage system ecosystem. PLC shows NAND Flash technology probing its physical limits under the current architecture. The industry generally believes that PLC may be approaching the practical limit of cell-level density improvement based on the charge storage principle. Future progress will rely more on continued increases in the number of 3D stacking layers and on innovations such as artificial intelligence to optimize data management and error correction efficiency, thereby achieving system-level breakthroughs in performance and reliability.

PLC NAND Flash is an important push against the physical limits of storage technology under the current architecture. It is a natural evolution driven by the continuous pursuit of lower-cost, higher-density storage. Although its inherent characteristics mean that it will primarily serve specific fields, continuous optimization of controller algorithms and system-level solutions is expected to give PLC an indispensable role in the future data storage ecosystem as the home for massive amounts of cold data.